Artificial Intelligence is evolving at a lightning pace. From clever chatbots to complex autonomous agents, AI is everywhere we look. But let’s be honest: many seemingly smart AI systems fall flat not because the underlying model is weak, but because we haven’t given them the necessary info. They lack the right context.

Context

Context is the background information an AI system uses to understand a request, resolve ambiguity, and generate an appropriate response.

Context Window

The context window of a Large Language Model (LLM) is the maximum amount of text, measured in tokens, that the model can “see” or process at one time, counting both input and output. This includes:

- Your prompt

- Any context you provide (documents, examples, previous messages)

- The model’s own response
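To make this budget concrete, here is a minimal Python sketch that checks whether a prompt, its supporting context, and a reserved allowance for the model's reply all fit inside the window. It assumes a crude one-token-per-word count. Real tokenizers split text into subword tokens, so actual counts are usually higher, and the window size used below is a hypothetical value, not any specific model's limit.

```python
def estimate_tokens(text: str) -> int:
    # Rough stand-in: one token per whitespace-separated word.
    # Real LLM tokenizers (subword/BPE) usually produce more tokens than this.
    return len(text.split())


def fits_in_window(prompt: str, context: list[str],
                   max_output_tokens: int, window_size: int) -> bool:
    """Return True if prompt + context + the reserved output budget fit in the window."""
    used = estimate_tokens(prompt) + sum(estimate_tokens(c) for c in context)
    return used + max_output_tokens <= window_size


prompt = "Summarize the attached incident report."
context = ["Report: the nightly backup job failed twice this week."]
print(fits_in_window(prompt, context, max_output_tokens=200, window_size=8000))  # True
```

The output allowance is reserved up front because the model's own response consumes the same window as the input.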

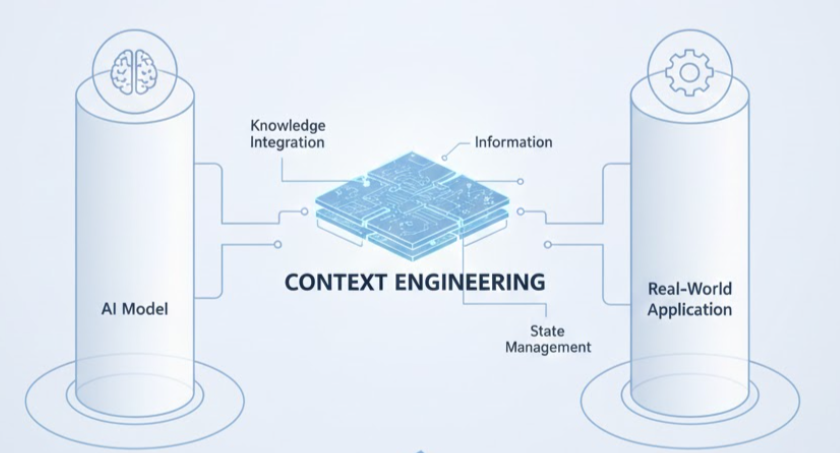

That’s why a new discipline is emerging: Context Engineering, the next big shift in AI development. It picks up right where prompt engineering leaves off.

From Prompt Engineering to Context Engineering: What’s the Difference?

You’ve probably heard of Prompt Engineering. That’s the craft of writing the perfect input query to coax the best output from a model. Think of it as mastering the art of asking a question. A great prompt guides the AI, sets the mood, and defines the format.

But as AI moves out of the demo stage and into real-world applications, a single, beautifully worded prompt just isn’t enough.

Complex tasks demand persistent knowledge:

What did the user say two minutes ago? What data sources should I trust? What’s the current system state?

That’s where Context Engineering steps in. Prompt engineering is about phrasing; context engineering is about building the world the AI lives in.

| Prompt Engineering | Context Engineering |
|---|---|
| It’s about how you ask. | It’s about what the model already knows. |
| Often manual & task-specific. | Systematic, scalable, and dynamic. |
| Works for simple, one-off tasks. | Essential for multi-step, real-world workflows. |
| Relies on word finesse. | Relies on curated data pipelines and memory. |

Why Context Engineering Is the Game Changer

Imagine you ask a financial AI assistant for stock recommendations. A basic prompt might get you a generic, “Maybe try index funds?” kind of answer.

But if that AI has access to your actual portfolio, real-time market trends, recent news, and your personal risk tolerance, it can give you precise, actionable advice.
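Here is a minimal Python sketch of that idea. The hypothetical `UserProfile` holds the user's risk tolerance, holdings, and recent news, and `build_context` folds them into the prompt alongside the question. The data is hard-coded for illustration. A real assistant would pull it from portfolio and market-data services, which are not shown here.

```python
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    # Hypothetical fields; a real assistant would load these from its own data stores.
    risk_tolerance: str
    holdings: dict[str, float]  # ticker -> portfolio weight (0.0 to 1.0)
    news: list[str] = field(default_factory=list)


def build_context(question: str, profile: UserProfile) -> str:
    """Assemble the user's situation into one prompt the model can reason over."""
    holdings = ", ".join(f"{t}: {w:.0%}" for t, w in sorted(profile.holdings.items()))
    news = "\n".join(f"- {item}" for item in profile.news) or "- (no recent news)"
    return (
        "You are a cautious financial assistant.\n"
        f"Risk tolerance: {profile.risk_tolerance}\n"
        f"Current holdings: {holdings}\n"
        f"Recent news:\n{news}\n\n"
        f"Question: {question}"
    )


profile = UserProfile("low", {"VTI": 0.7, "BND": 0.3}, ["Bond yields rose this week."])
print(build_context("Should I rebalance my portfolio?", profile))
```

The question itself stays the same. What changes the answer is everything assembled around it.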

Context engineering is how we give AI systems their superpowers:

- Reliability you can trust – Grounding answers in real data cuts down on frustrating “hallucinations” and irrelevant outputs.

- Scalability – The system can handle messy, real-time data and multi-step workflows.

- Enterprise-ready – It can be designed to integrate safely with secure databases, internal APIs, and sensitive user data.

- True Intelligence – The AI maintains a “memory,” reasons over past actions, and uses external tools such as a calculator or a calendar API.

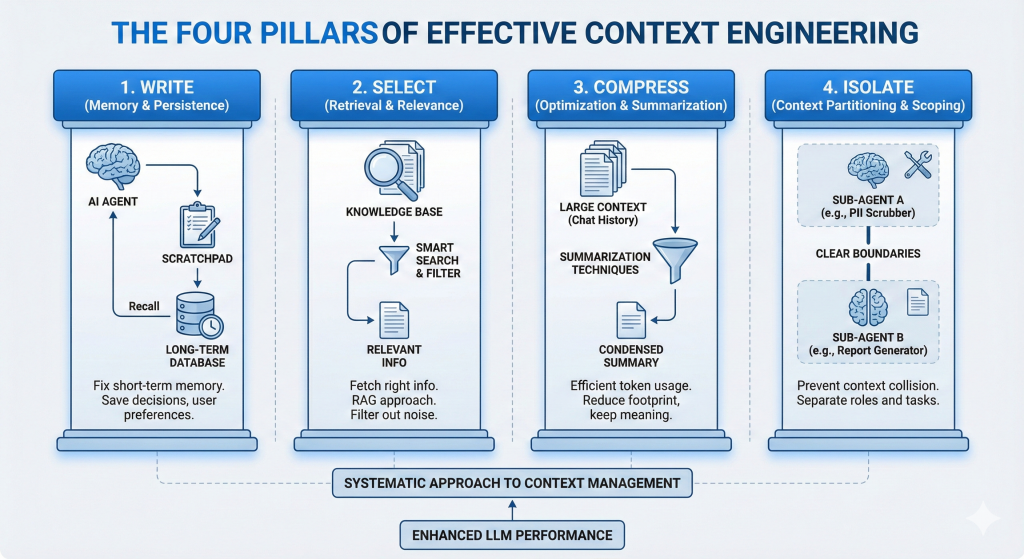

The Four Pillars of Effective Context Engineering

It’s not just about dumping a massive text file into the prompt window. It requires a systematic approach to managing the flow and quality of information the LLM sees.

Effective context engineering is built upon these four fundamental pillars:

Write (Memory and Persistence)

This is how we fix the AI’s short-term memory problem. It’s about giving the agent the ability to remember things after a conversation ends or an action is taken.

In Action: The AI uses a “scratchpad” to work out complex reasoning steps, logs key decisions, or saves user preferences into a long-term database to remember you next time you log in.
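The sketch below assumes a simple design. A scratchpad list lives only for the current session, and a preferences dictionary is persisted to a JSON file so it survives into the next one. `AgentMemory` and the file layout are hypothetical. Production systems more often use a database or a dedicated memory service.

```python
import json
import tempfile
from pathlib import Path


class AgentMemory:
    """Short-term scratchpad plus long-term preferences stored in a JSON file."""

    def __init__(self, path: Path) -> None:
        self.path = path
        # Short-term: reasoning notes for this session only (never written to disk).
        self.scratchpad: list[str] = []
        # Long-term: preferences loaded from disk if a previous session saved any.
        self.preferences: dict[str, str] = (
            json.loads(path.read_text()) if path.exists() else {}
        )

    def note(self, step: str) -> None:
        """Log an intermediate reasoning step or decision."""
        self.scratchpad.append(step)

    def remember(self, key: str, value: str) -> None:
        """Save a preference and persist it immediately."""
        self.preferences[key] = value
        self.path.write_text(json.dumps(self.preferences, indent=2))


# Usage: one session saves a preference, a later session reloads it.
path = Path(tempfile.mkdtemp()) / "user_42.json"
session = AgentMemory(path)
session.note("User asked for a weekly sales summary.")
session.remember("report_format", "bullet points")

next_login = AgentMemory(path)
print(next_login.preferences)  # {'report_format': 'bullet points'}
print(next_login.scratchpad)   # []
```

Splitting memory this way keeps throwaway reasoning out of long-term storage, while the preferences that matter carry over to the next login.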

Select (Retrieval and Relevance)

We need to fetch the right information at the right time. This is the heart of the RAG (Retrieval-Augmented Generation) approach.

In Action: Using smart search techniques to pull specific company policies or technical documents from a knowledge base, filtering out the vast majority of data that isn’t relevant to the current question.
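Retrieval can be illustrated with a toy ranker. Real RAG pipelines typically compare vector embeddings. This sketch swaps in plain word-overlap cosine similarity so it runs with the standard library alone. The `retrieve` function, its `k` and `min_score` parameters, and the sample knowledge base are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    # Lowercased word counts; a stand-in for an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2, min_score: float = 0.1) -> list[str]:
    """Return the ids of the top-k documents most similar to the query, dropping weak matches."""
    q = tokenize(query)
    scored = [(cosine(q, tokenize(text)), doc_id) for doc_id, text in docs.items()]
    scored.sort(reverse=True)
    return [doc_id for score, doc_id in scored[:k] if score >= min_score]


knowledge_base = {
    "travel-policy": "Employees may book economy flights for trips under six hours.",
    "expense-policy": "Submit expense reports within 30 days with itemized receipts.",
    "vpn-guide": "Install the VPN client before connecting to internal systems.",
}
print(retrieve("How many days do I have to submit an expense report?", knowledge_base, k=1))
# ['expense-policy']
```

Only the selected document would be placed in the model's context. The `min_score` cutoff keeps weak matches out, even when fewer than `k` documents remain.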

Compress (Optimization and Summarization)

The AI’s context window is finite and valuable. We have to be efficient and reduce the token footprint without losing critical meaning.

In Action: Instead of feeding the AI 50 pages of chat history, you use clever summarization techniques to condense that history into a single, clean paragraph of key points.
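One common compression pattern keeps the most recent turns verbatim and collapses everything older into a single summary message. In this sketch the summarizer is a deliberately naive stand-in that keeps the first sentence of each older message. A real system would usually ask an LLM to write the summary. The message format (`role`/`content` dictionaries) is an assumption modeled on common chat APIs.

```python
import re


def first_sentence(text: str) -> str:
    # Naive extractive "summary": keep only the first sentence of a message.
    return re.split(r"(?<=[.!?])\s+", text.strip(), maxsplit=1)[0]


def compress_history(messages: list[dict], keep_recent: int = 2) -> list[dict]:
    """Keep the last `keep_recent` messages and fold older ones into one summary."""
    if len(messages) <= keep_recent:
        return messages
    cut = len(messages) - keep_recent
    older, recent = messages[:cut], messages[cut:]
    points = " ".join(f"[{m['role']}] {first_sentence(m['content'])}" for m in older)
    summary = {"role": "system", "content": f"Earlier conversation, condensed: {points}"}
    return [summary] + recent


history = [
    {"role": "user", "content": "I'm planning a trip to Lisbon in May. Any tips?"},
    {"role": "assistant", "content": "May is a great time to visit. The weather is usually mild."},
    {"role": "user", "content": "My budget is 150 euros per night."},
    {"role": "assistant", "content": "Noted. I'll look for hotels in that range."},
]
compressed = compress_history(history, keep_recent=2)
print(compressed[0]["content"])
# Earlier conversation, condensed: [user] I'm planning a trip to Lisbon in May. [assistant] May is a great time to visit.
```

Keeping recent turns word for word preserves the details the model most likely needs next, while older turns shrink to a few tokens.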

Isolate (Context Partitioning and Scoping)

This principle involves preventing “context collision” by partitioning different types of information or different agent roles into separate, distinct contexts.

In Action: Using separate sub-agents for specialized tasks (e.g., a “PII scrubber” agent and a “report generator” agent), or defining clear boundaries for tool usage to ensure the AI stays focused.
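Isolation can be sketched as sub-agents that each build their prompt only from their own instructions and context. Here the hypothetical `SubAgent` class stands in for the report generator, and the PII scrubber is swapped for a plain regex function rather than a model. The patterns are illustrative only and would miss many real-world PII formats.

```python
import re
from dataclasses import dataclass, field


@dataclass
class SubAgent:
    """A hypothetical sub-agent with its own instructions and its own private context."""
    name: str
    instructions: str
    context: list[str] = field(default_factory=list)

    def build_prompt(self, task: str) -> str:
        # Only this agent's own instructions and context go into its prompt.
        return "\n".join([self.instructions, *self.context, f"Task: {task}"])


EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def scrub_pii(text: str) -> str:
    # Deterministic stand-in for the "PII scrubber" agent: mask emails, then phone-like numbers.
    return PHONE.sub("[PHONE]", EMAIL.sub("[EMAIL]", text))


raw_ticket = "Customer jane.doe@example.com (phone +1 555-123-4567) reports login failures."
reporter = SubAgent("report_generator", "Write a one-paragraph incident report.")
reporter.context.append(scrub_pii(raw_ticket))  # the reporter never sees the raw ticket
print(reporter.build_prompt("Summarize the issue for the weekly report."))
```

Because the report generator's context holds only scrubbed text, the raw email address and phone number never enter its prompt.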

Good Prompts vs Bad Prompts

Even with rich context, prompts matter. Context engineering provides the environment, but prompt engineering provides the specific instruction set. Here’s how to tell the difference:

Good Prompts

- Clear, concise, and unambiguous.

- Provide examples or role definitions when helpful.

- Specify output format (“JSON”, “bullet points”, etc.).

Bad Prompts

- Vague or overly broad (“Explain this”).

- Contradictory or cluttered instructions.

- Rely on AI “magic” without grounding.

Remember: good prompts + rich context = optimal AI output.
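To illustrate a good prompt combined with context, the sketch below assembles a role, grounding context, one clear task, and an explicit JSON output format. It then checks that a reply actually follows that format. The helper names are hypothetical, and the model call is replaced by a hard-coded reply string.

```python
import json


def build_prompt(role: str, context: str, task: str, fields: list[str]) -> str:
    """Combine a role, grounding context, one clear task, and an explicit JSON output format."""
    schema = json.dumps({f: "..." for f in fields})
    return (
        f"You are {role}.\n\n"
        f"Context:\n{context}\n\n"
        f"Task: {task}\n"
        f"Respond with JSON only, using exactly these keys: {schema}"
    )


def parse_response(raw: str, fields: list[str]) -> dict:
    # Reject output that is not a JSON object or is missing a requested key.
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("response is not a JSON object")
    missing = [f for f in fields if f not in data]
    if missing:
        raise ValueError(f"response missing keys: {missing}")
    return data


fields = ["severity", "next_step"]
prompt = build_prompt(
    role="a support analyst",
    context="Ticket: the checkout page times out for EU users since 09:00 UTC.",
    task="Classify the ticket's severity and suggest one next step.",
    fields=fields,
)
# Stand-in for a real model call.
fake_reply = '{"severity": "high", "next_step": "Check the EU load balancer logs."}'
print(parse_response(fake_reply, fields)["severity"])  # high
```

Specifying the output format in the prompt and validating it afterwards means malformed replies are caught in code, not passed downstream.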

Why Context Engineering is the Future of AI

AI is no longer about clever prompts or isolated answers. Real-world applications demand robust, scalable, data-driven systems.

Context engineering grounds AI in reality, reduces errors, and allows models to reason over relevant data.

Enterprises adopting context engineering can create trustworthy AI products that perform reliably under changing conditions.

Prompt engineering builds the question; context engineering builds the world in which the AI answers it.

Conclusion

Prompt engineering was the first wave — the art of asking AI the right question.

Context engineering is the next wave — the science of giving AI the right knowledge, environment, and memory to answer it wisely.

In today’s AI-driven world, context engineering isn’t just a nice-to-have — it’s the foundation for building intelligent, reliable, production-grade AI systems.